
What is a Paper Battery?

A paper battery is a flexible, ultra-thin energy storage and production device formed by combining carbon nanotubes with a conventional sheet of cellulose-based paper. A paper battery acts as both a high-energy battery and a supercapacitor, combining two components that are separate in traditional electronics. This combination allows the battery to provide both long-term, steady power production and bursts of energy. In addition to being unusually thin, paper batteries are flexible and environmentally friendly, allowing integration into a wide range of products. They function much like conventional chemical batteries, with the important difference that they are non-corrosive and do not require extensive housing.

How does a Paper Battery work?

The conventional rechargeable batteries we use in day-to-day life consist of several separate components that produce electrons through the chemical reaction of a metal and an electrolyte. Once the paper of a paper battery is dipped in an ion-based liquid, the battery starts working: a chemical reaction between the electrodes and the liquid generates electricity, with electrons moving from the anode terminal to the cathode terminal through the external circuit. Because the ions flow quickly, energy is stored in the paper electrode within a few seconds (about 10 seconds) during recharging.

The output of the paper battery can be increased by stacking several paper batteries on top of each other. Because the stacked batteries sit very close together, there is a chance of a short circuit between the anode terminal and the cathode terminal. If the anode comes into direct contact with the cathode, no current flows in the external circuit. A barrier or separator is therefore needed between anode and cathode, and the paper itself fulfils this role.
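As a rough illustration of how stacking raises the output, the sketch below treats each paper cell as an ideal source. The per-cell voltage and capacity are placeholder values chosen for the example, not measured figures for any real paper battery, and the function name `stack_output` is hypothetical. Cells stacked in series add their voltages, while strings connected in parallel add their capacities.

```python
def stack_output(cell_voltage_v, cell_capacity_mah, n_series, n_parallel=1):
    """Return (voltage in V, capacity in mAh, energy in mWh) of an ideal stack."""
    if n_series < 1 or n_parallel < 1:
        raise ValueError("a stack needs at least one cell in each direction")
    voltage = cell_voltage_v * n_series          # series cells add voltage
    capacity = cell_capacity_mah * n_parallel    # parallel strings add capacity
    energy = voltage * capacity                  # V * mAh = mWh
    return voltage, capacity, energy


# Assumed example values: 2.0 V and 10 mAh per paper cell.
v, c, e = stack_output(2.0, 10.0, n_series=5, n_parallel=2)
print(f"{v:.1f} V, {c:.1f} mAh, {e:.1f} mWh")
```

Real cells have internal resistance and are never perfectly matched, so a physical stack would deliver somewhat less than this ideal estimate.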

“Technology is driving the innovation. Technology is driving the creativity”

What are Paper Battery Properties?

The properties of the paper battery derive from the properties of cellulose, such as:

- excellent porosity

- biodegradability

- non-toxicity

- recyclability

- high tensile strength

- good absorption capacity

- low shear strength

The properties also derive from those of carbon nanotubes, such as low mass density, flexibility, high packing density, lightness, better electrical conductivity than silicon, thinness (around 0.5 to 0.7 mm), and low resistance.

Paper Battery Manufacturing:

One method is to grow the nanotubes on a silicon substrate and then fill the gaps in the matrix with cellulose. Once the matrix has dried, the material can be removed from the substrate. Two sheets are then combined with their cellulose sides facing inwards to form the battery structure, and the electrolyte is added to it.

“Technology will not replace great teachers but technology in the hands of great teachers can be transformational”

Applications of Paper Batteries:

With developing technologies and the falling cost of carbon nanotubes, these batteries find applications in the following fields:

- Nanotubes used for Paper Battery

Paper Battery = Paper (Cellulose) + Carbon Nanotubes

The paper battery can be used for various applications because it can be folded, twisted, molded, crumpled, shaped, and cut without affecting its efficiency. Because paper batteries combine cellulose paper and carbon nanotubes, they offer long-term usage, steady power, and bursts of energy. Batteries of this type are expected to power next-generation vehicles and medical devices.

- Paper batteries in electronics

Paper batteries are suited to many electronic devices, such as mobile phones, laptops, calculators, and digital cameras, and to wireless devices such as mice, keyboards, Bluetooth speakers, and headsets.

- Paper batteries in medical sciences

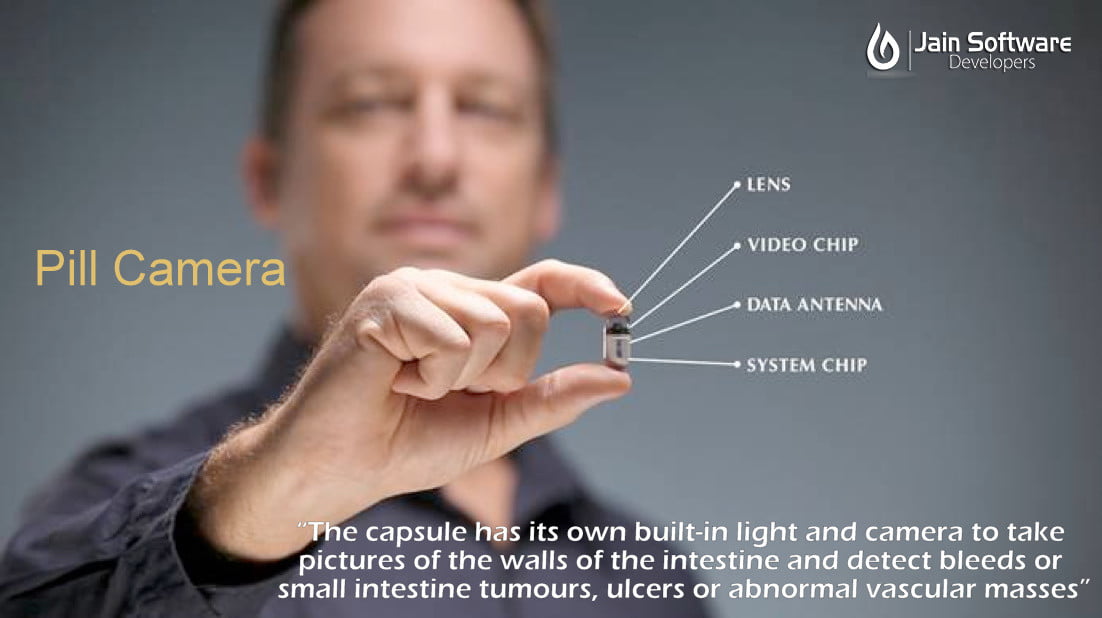

Paper batteries are used in the medical field, for example in pacemakers for the heart, artificial tissues, drug delivery systems, cosmetics, and biosensors.

- Paper batteries in automobiles and aircraft

Paper batteries are used in automobiles and aircraft for powering electronic devices, for example in lightweight guided missiles, hybrid car batteries, long air flights, and satellite programs.

That covers the working principle and main applications of the paper battery.

These batteries have the potential adaptability to power next-generation electronic appliances, medical devices, and hybrid vehicles, so they could address many of the problems associated with conventional electrical energy storage devices.

- Electrochemical Batteries

Electrochemical batteries can be modified to integrate the use of paper. An electrochemical battery typically uses two metals, separated into two chambers and connected by a bridge or a membrane that permits the exchange of ions between the two chambers, thereby producing energy. Paper can be integrated into electrochemical batteries by depositing the electrode onto the paper and by using paper to contain the fluid used to activate the battery. Patterned paper can also be used in electrochemical batteries, which makes the battery more compatible with paper electronics. These batteries tend to produce low voltage and operate for short periods of time, but they can be connected in series to increase their output and capacity.

Paper batteries of this type can be activated with bodily fluids, which makes them very useful in the healthcare field, for example in single-use medical devices or tests for specific diseases. A battery of this type has been developed with a longer life to power point-of-care devices for the healthcare industry. The device, which used a paper battery made with a magnesium foil anode and a silver cathode, has been used to detect diseases in patients such as kidney cancer, liver cancer, and osteoblastic bone cancer. The paper was patterned using wax printing and can be disposed of easily. Furthermore, this battery was developed at a low cost and has other practical applications.

- Lithium-ion Batteries

Paper can be used in lithium-ion batteries as regular, commercial paper, or as paper enhanced with single-walled carbon nanotubes. The enhanced paper is used as the electrode and as the separator, which results in a sturdy, flexible battery with strong performance: good cycling, high efficiency, and good reversibility. Using paper as a separator is more effective than using plastic. The process of enhancing the paper, however, can be complicated and costly, depending on the materials used. A carbon nanotube and silver nanowire film can be used to coat regular paper to create a simpler and less expensive separator and battery support. The conductive paper can also be used to replace traditionally used metallic chemicals. The resulting battery performs well while simplifying the manufacturing process and reducing the cost.

Lithium-ion paper batteries are flexible, durable, rechargeable, and produce significantly more power than electrochemical batteries. In spite of these advantages, there are still some drawbacks. For the paper to be integrated with the Li-ion battery, complex layering and insulating techniques are required. One reason for these techniques is to strengthen the paper so that it does not tear as easily, which contributes to the overall strength and flexibility of the battery. These techniques require time, training, and costly materials. Additionally, the individual materials required are not environmentally friendly and need specific disposal procedures. Paper lithium-ion batteries would be best suited to applications requiring a substantial amount of energy over an extended period of time.

Lithium-ion paper batteries can be composed of carbon nanotubes and a cellulose-based membrane and produce good results, but at a high price. Other researchers have been successful using carbon paper manufactured from pyrolyzed filter paper. The paper is inserted between the electrode and the cathode. Using carbon paper as an interlayer in Li-S batteries improves the battery's efficiency and capacity. The carbon paper increases the contact area between the cathode and the electrode, which allows for a greater flow of electrons. The pores in the paper allow the electrons to travel easily while preventing the anode and the cathode from coming into contact with one another. This translates into greater output, battery capacity, and cycle stability, all improvements over conventional Li-S batteries. The carbon paper is made from pyrolyzed filter paper, which is inexpensive to make and performs like the multi-walled carbon nanotube paper used as a battery.

- Biofuel Cells

Biofuel cells operate similarly to electrochemical batteries, except that they use components such as sugar, ethanol, pyruvate, and lactate, instead of metals, to facilitate the redox reactions that produce electrical energy. The enhanced paper is used to contain and separate the positive and negative components of the biofuel cell. One paper biofuel cell started up much more quickly than a conventional biofuel cell, since the porous paper was able to absorb the positive biofuel and promote the attachment of bacteria to it. This battery is capable of producing a significant amount of power after being activated by a wide range of liquids, and can then be disposed of. Some further development is needed, since some components are toxic and expensive.

Naturally occurring electrolytes might allow biocompatible batteries for use on or within living bodies. A researcher has described paper batteries as a way to power a small device such as a pacemaker without introducing into the body the harsh chemicals typically found in batteries.

Their ability to use electrolytes in the blood makes them potentially useful for medical devices such as pacemakers, medical diagnostic equipment, and transdermal drug delivery patches. German healthcare company KSW Microtech is using the material to power blood supply temperature monitoring.

- Supercapacitors

Paper battery technology can be used in supercapacitors. Supercapacitors operate and are manufactured similarly to electrochemical batteries, but are generally capable of greater performance and can be recharged. Paper or enhanced paper can be used to develop thin, flexible supercapacitors that are lightweight and less expensive. Paper that has been enhanced with carbon nanotubes is generally preferred over regular paper because it has increased strength and allows for easier transfer of electrons between the two metals. The electrolyte and the electrode are embedded into the paper, which produces a flexible paper supercapacitor that can compete with some commercial supercapacitors produced today. A paper supercapacitor would be well suited to a high-power application.
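To make the contrast between a supercapacitor's burst of power and a battery's steady supply more concrete, the sketch below compares the energy stored in an ideal capacitor, E = ½CV², with the energy in an ideal cell, E = V × Ah. All component values and discharge times are assumptions picked for illustration, not data for any paper device, and the function names are hypothetical.

```python
def capacitor_energy_j(capacitance_f, voltage_v):
    """Energy stored in an ideal capacitor: E = 1/2 * C * V^2 (joules)."""
    return 0.5 * capacitance_f * voltage_v ** 2


def battery_energy_j(voltage_v, capacity_mah):
    """Energy in an ideal cell: V * Ah * 3600 s/h (joules)."""
    return voltage_v * (capacity_mah / 1000.0) * 3600.0


def average_power_w(energy_j, duration_s):
    """Average power if the stored energy is released evenly over duration_s."""
    if duration_s <= 0:
        raise ValueError("duration must be positive")
    return energy_j / duration_s


# Assumed values: a 1 F supercapacitor charged to 2.5 V, and a 2.5 V, 10 mAh cell.
cap_j = capacitor_energy_j(1.0, 2.5)
bat_j = battery_energy_j(2.5, 10.0)

# Assumed discharge times: one second for the capacitor, one hour for the cell.
print(f"capacitor: {cap_j:.3f} J, {average_power_w(cap_j, 1.0):.3f} W burst")
print(f"battery:   {bat_j:.1f} J, {average_power_w(bat_j, 3600.0):.3f} W steady")
```

With these assumed numbers the cell holds far more energy, while the capacitor delivers far more power for a short time, which is the trade-off a combined battery and supercapacitor is meant to cover.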

- Nanogenerators

Nanogenerators are a more recent type of device that converts mechanical energy to electrical energy. Paper is desirable as a component of nanogenerators for the same reasons discussed above. Such devices can capture movement, such as body movement, and convert it into electrical energy that could power LED lights, for example.

“When you take technology and mix it with art, you always come up with something innovative”

Advantages of Paper Battery:

The composition of these batteries is what sets them apart from traditional batteries.

- Paper is abundant and self-sustaining, which makes paper cheap. Disposing of paper is also inexpensive since paper is combustible as well as biodegradable.

- Using paper gives the battery a great degree of flexibility.

- The battery can be bent or wrapped around objects instead of requiring a fixed casing.

- Being a thin, flat sheet, the paper battery can easily fit into tight places, reducing the size and weight of the device it powers.

- The use of paper increases the electron flow which is well suited for high-performance applications. Paper allows for capillary action so fluids in batteries, such as electrolytes, can be moved without the use of an external pump.

- Using paper in batteries increases the surface area that can be used to integrate reagents. The paper used in paper batteries can be supplemented to improve its performance characteristics.

- Patterning techniques such as photolithography, wax printing, and laser micromachining are used to create hydrophobic and hydrophilic sections on the paper to create a pathway to direct the capillary action of the fluids used in batteries.

- Similar techniques can be used to create electrical pathways on paper, producing paper electrical devices that can integrate paper energy storage.
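The capillary action mentioned in the list above can be estimated with the Lucas-Washburn relation, a standard model for a liquid wicking into a porous material: L = sqrt(γ · r · cos θ · t / (2η)). The sketch below is only an illustration. The default surface tension and viscosity are approximate values for water at room temperature, the pore radius is an assumed figure rather than a measurement of any battery paper, and the function name is hypothetical. Real electrolytes and real paper will behave differently.

```python
import math


def washburn_distance_m(time_s, pore_radius_m,
                        surface_tension_n_per_m=0.0728,  # approx. water, 20 °C
                        viscosity_pa_s=1.0e-3,           # approx. water, 20 °C
                        contact_angle_deg=0.0):
    """Wicking distance from the Lucas-Washburn relation.

    L = sqrt(gamma * r * cos(theta) * t / (2 * eta))
    """
    cos_theta = math.cos(math.radians(contact_angle_deg))
    if cos_theta <= 0 or time_s <= 0:
        return 0.0  # a non-wetting liquid does not wick into the paper
    return math.sqrt(surface_tension_n_per_m * pore_radius_m * cos_theta
                     * time_s / (2.0 * viscosity_pa_s))


# Assumed example: 1 micrometre pores, fully wetting liquid, 10 seconds.
distance = washburn_distance_m(time_s=10.0, pore_radius_m=1.0e-6)
print(f"estimated wicking distance: {distance * 100:.2f} cm")
```

Because the distance grows with the square root of time, wicking is fast over the first few millimetres and slows down over longer paths, which is one reason patterned channels on paper are kept short.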

Disadvantages

Although the advantages of paper batteries are quite impressive, many of the components and techniques that make them great, such as carbon nanotubes and patterning, are complicated and expensive.

“The science of today is the Technology of future”