Emerging cyber threats you should be concerned about


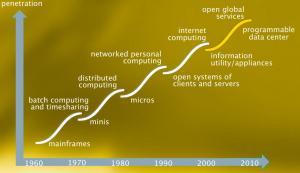

In this digital world, we are obsessed with technology, and technology changes constantly. As new technologies emerge, the threats to cybersecurity increase every day.


Cybersecurity comprises technologies, processes, and controls that are designed to protect systems, networks, and data from cyber-attacks. Effective cybersecurity reduces the risk of cyber-attacks and protects organizations and individuals from the unauthorized exploitation of systems, networks, and technologies.

Consequences of a Cyber-attack

Cyber-attacks can disrupt and cause considerable financial and reputational damage to even the most resilient organization. If you suffer a cyber-attack, you stand to lose assets, reputation, and business, and potentially face regulatory fines and litigation – as well as the costs of remediation.

Many of the cyber-attacks we currently face involve monetary fraud and information breaches.

Nowadays, most people have little idea of the potential threats. They are often exposed to social engineering and hand their information to third parties with malicious intent. As a result, they lose money, data or information.

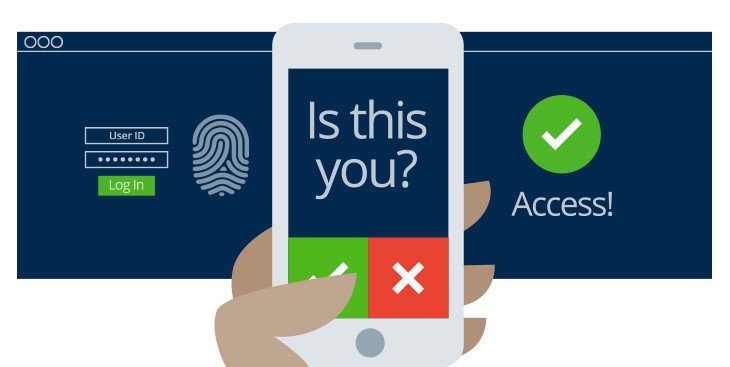

In India, much of an individual’s information is linked to the Aadhaar card, which gives each person a unique identification number. It is tied to biometric data such as the person’s fingerprints, and bank accounts are often linked to it as well. So it is our responsibility to secure that information from hackers.


Threats to organization


All Internet-facing organizations are at risk of attack. It’s not a question of if you’ll be attacked, but when. The majority of cyber-attacks are automated and indiscriminate, exploiting known vulnerabilities rather than targeting specific organizations. Your organization could be breached right now and you might not even know it.

Up to 70% of emails today are spam, and the vast majority of these still involve phishing scams. Other common hacking threats include ransomware, malware, and distributed denial-of-service (DDoS) attacks. All of these have been responsible for major data breaches in recent months and can leave both company and customer data vulnerable to cyber-criminals. A massive 93% of data breaches are motivated by financial gain, according to a recent Verizon report. Hackers aim for the highest return for the least amount of effort, which is why smaller businesses with lax security are often successfully targeted.

Banking, Financial Services and Insurance (BFSI): The BFSI sector is under growing pressure to update its legacy systems to compete with new digital-savvy competitors. The value of the customer data these firms hold has grown as consumers demand more convenient and personalized service, but trust is essential. Some 50% of customers would consider switching banks if theirs suffered a cyber-attack, while 47% would “lose complete trust” in them, according to a recent study. A number of major banks around the world have already been subject to high-profile cyber-attacks, suggesting that the sector needs to improve its approach to risk. Financial firms should invest in security applications that can adapt to the future of banking and provide comprehensive, around-the-clock security.

Shared ledgers are expected to feature prominently in the future of the BFSI sector. The best-known example is blockchain, which forms the backbone of the cryptocurrency Bitcoin. A blockchain is a database that provides a permanent record of transactions. Because each record is cryptographically linked to the one before it, the audit trail is very hard to tamper with unnoticed, which means it could transform security in the BFSI sector.
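To make the tamper-evident audit trail idea concrete, here is a minimal Python sketch of a hash-chained ledger. It is only an illustration: the function names (`block_hash`, `build_ledger`, `is_valid`) and the sample transactions are made up for this post, and a real blockchain adds much more (distributed consensus, digital signatures, networking). The sketch shows just one property: changing an old record breaks every hash that follows it.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(index, transaction, previous_hash):
    """Hash a block's contents together with the previous block's hash."""
    payload = json.dumps(
        {"index": index, "transaction": transaction, "previous_hash": previous_hash},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def build_ledger(transactions):
    """Chain transactions so that each block commits to the one before it."""
    ledger = []
    previous_hash = GENESIS_HASH
    for index, transaction in enumerate(transactions):
        current_hash = block_hash(index, transaction, previous_hash)
        ledger.append(
            {
                "index": index,
                "transaction": transaction,
                "previous_hash": previous_hash,
                "hash": current_hash,
            }
        )
        previous_hash = current_hash
    return ledger


def is_valid(ledger):
    """Recompute every hash; an edited block no longer matches its stored hash."""
    previous_hash = GENESIS_HASH
    for block in ledger:
        if block["previous_hash"] != previous_hash:
            return False
        expected = block_hash(block["index"], block["transaction"], previous_hash)
        if block["hash"] != expected:
            return False
        previous_hash = block["hash"]
    return True
```

For example, build a ledger with `build_ledger(["A pays B 10", "B pays C 4"])`, check it with `is_valid`, then edit the first transaction in place: the check fails, because the stored hash no longer matches the altered contents.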


AI-integrated attacks

AI is a double-edged sword as 91% of security professionals are concerned that hackers will use AI to launch even more sophisticated cyber-attacks.

AI can be used to automate the collection of information, perhaps relating to a specific organization, from support forums, code repositories, social media platforms and more. AI may also help hackers crack passwords by narrowing down the number of probable passwords based on geography, demographics and other such factors.
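On the defensive side, the same idea can be turned around: if a password is built from details that anyone could look up, it falls inside that narrowed-down set of probable passwords. The Python sketch below is a rough self-check under simple assumptions. The function names and the sample profile are hypothetical, and a substring match is far cruder than what a real attacker or a real password-strength tool would do.

```python
import re


def context_tokens(profile):
    """Collect lowercase words and numbers from publicly known details."""
    tokens = set()
    for value in profile.values():
        tokens.update(re.findall(r"[a-z]+|\d+", str(value).lower()))
    # Ignore very short fragments that would match almost any password.
    return {token for token in tokens if len(token) >= 3}


def guessable_parts(password, profile):
    """Return the profile tokens that appear inside the password."""
    lowered = password.lower()
    return sorted(token for token in context_tokens(profile) if token in lowered)


# Hypothetical profile of details that could be found on social media.
profile = {"name": "Asha Verma", "city": "Pune", "birth_year": 1990}

print(guessable_parts("Pune@1990", profile))   # contains the city and birth year
print(guessable_parts("t7#Lq9!vZx", profile))  # contains none of the profile details
```

A password that returns any matches here is a candidate for replacement, ideally with a long random one stored in a password manager.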

Telecom: As carriers of internet data, telecom firms face significant cybersecurity risk and therefore bear a huge responsibility. Providers need to integrate cybersecurity measures into network hardware, software, applications and end-user devices to minimize the risk of a serious data breach, which could leave customer credentials and communications vulnerable. Consumers are increasingly careful about whom they entrust their personal data to, which creates a strong opportunity for networks that offer additional security services. In addition, collaboration between rival operators could lead to greater resilience against cyber attackers.

As technologies progress, the skills needed to deal with cyber-security needs are changing. The challenge is to train cyber-security professionals so that they can deal with threats as quickly as possible and also adapt their skills as needed. There will be some 3.5 million unfilled cyber-security roles by 2021.

Research your audience: Designing a logo is not just about creating an appealing visual. Like the overall color scheme and design of your site, your logo sets your brand apart from the competition and shows people that you’re a legitimate business. Logos are a critical part of the modern visual landscape. Throw yourself into the brand. Save all your sketches. Research online. Create mind maps or mood boards. Build a board and tear it apart. Stop with the clichés. Know your customer’s needs. Give them a variety of designs to consider, and ask what message they want to convey through the logo. Don’t just be a designer – be a good one. Designing an effective logo is not a quick or easy process. It requires thorough research, thought, care and attention to ensure the final logo design targets the correct market and broadcasts the right message. A poorly designed logo will have a negative effect on the perception of your business; a carefully designed logo, however, can transform a business by attracting the right people.

Once the idea has been explored on paper, you should begin to work on the designs in Adobe Illustrator, a vector-based program, which means the artwork is scalable and will not lose quality. You should continue to explore and experiment with the ideas even during this stage, so that the idea is presented in its best possible light. Use vector shapes in Adobe Illustrator CC to create a logo that looks good onscreen and in print. The best part about vector art is that it scales to any size: whether it is for a small business card or a large billboard, vector art can be resized without losing quality. Try to learn about the golden ratio. It will help you to get a better understanding of various designs.


Designing the Idea and presenting: Once designs are ready to present, you should create a PDF document that displays the logo designs, with images of the designs in real-life examples, along with supporting notes explaining the decisions made. Present only designs you are confident in, and give your opinion on which you believe will be most suitable for your client’s business. Leave the final choice to your client; if the design can be improved or modified to better meet the objectives, make changes where necessary.